Streaming Platforms

Overview

The decentralisation of renewable energy production leads to many smaller generation plants that need to be managed. The number of data points is increasing massively, challenging the existing IT infrastructure. In addition, the growing number of volatile generation plants – such as photovoltaic (PV) systems and wind turbines – is creating a challenge in grid operation as unforeseen critical system states occur more frequently and require quick responses.

On a 24/7 basis, TSOs use a wide spectrum of data and data processing tools to make decisions:

- Central to a TSO decision is the security of electricity supply for its own grid area as well as the influence on neighbouring TSOs and underlying DSOs.

- A TSO needs to comply with stringent regulatory requirements on overall electricity system reliability.

To enable system operators to operate the electrical grid securely, the existing IT infrastructure – mostly based on large monolithic systems with limited integration – needs to be adapted so that it scales faster and can react to change. The following guiding principles are proposed:

- For better integration and individual scaling, the functions of the previously monolithic or vertical applications should be split into flexibly combinable modules. Features with high reuse potential should move into the underlying platform rather than being rebuilt in every single module.

- For the separation of concerns, any new module can be developed separately.

- For rapid scaling, an event-driven technology such as event streaming should be used as a foundation. By using a joint data model, all types of process and master data are integrated and made accessible to the different modules and functions.

To support the above, a streaming platform can be used. The term extends the common understanding of streaming, i.e. a technology that sends data over the internet for immediate use rather than requiring a download or being usable only at broadcast time.
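The core idea of event streaming can be sketched as an append-only log that several consumers read independently, each at its own pace. The following is a minimal in-memory illustration only, not the design of any particular streaming platform; the `Event` and `EventLog` names, the topic `switch-state`, and the identifier `breaker-17` are assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass(frozen=True)
class Event:
    """One immutable record in the stream (hypothetical shape)."""
    topic: str   # e.g. "switch-state"
    key: str     # e.g. identifier of a grid element
    value: Any   # payload, e.g. "OPEN" or "CLOSED"


@dataclass
class EventLog:
    """In-memory, append-only log; each consumer tracks its own offset."""
    _events: list = field(default_factory=list)
    _offsets: dict = field(default_factory=dict)

    def append(self, event: Event) -> int:
        """Store an event and return its position in the log."""
        self._events.append(event)
        return len(self._events) - 1

    def poll(self, consumer: str) -> list:
        """Return all events the named consumer has not yet seen."""
        start = self._offsets.get(consumer, 0)
        new_events = self._events[start:]
        self._offsets[consumer] = len(self._events)
        return new_events


log = EventLog()
log.append(Event("switch-state", "breaker-17", "OPEN"))
log.append(Event("switch-state", "breaker-17", "CLOSED"))

# Two modules read the same events independently of each other.
monitoring_batch = log.poll("monitoring")
archive_batch = log.poll("archive")
```

Because the log keeps the events and only the per-consumer offsets move, a module added later can still read the full history, which is one reason a log-based design suits independently developed modules.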

Benefits

Benefits of event and data-driven technology

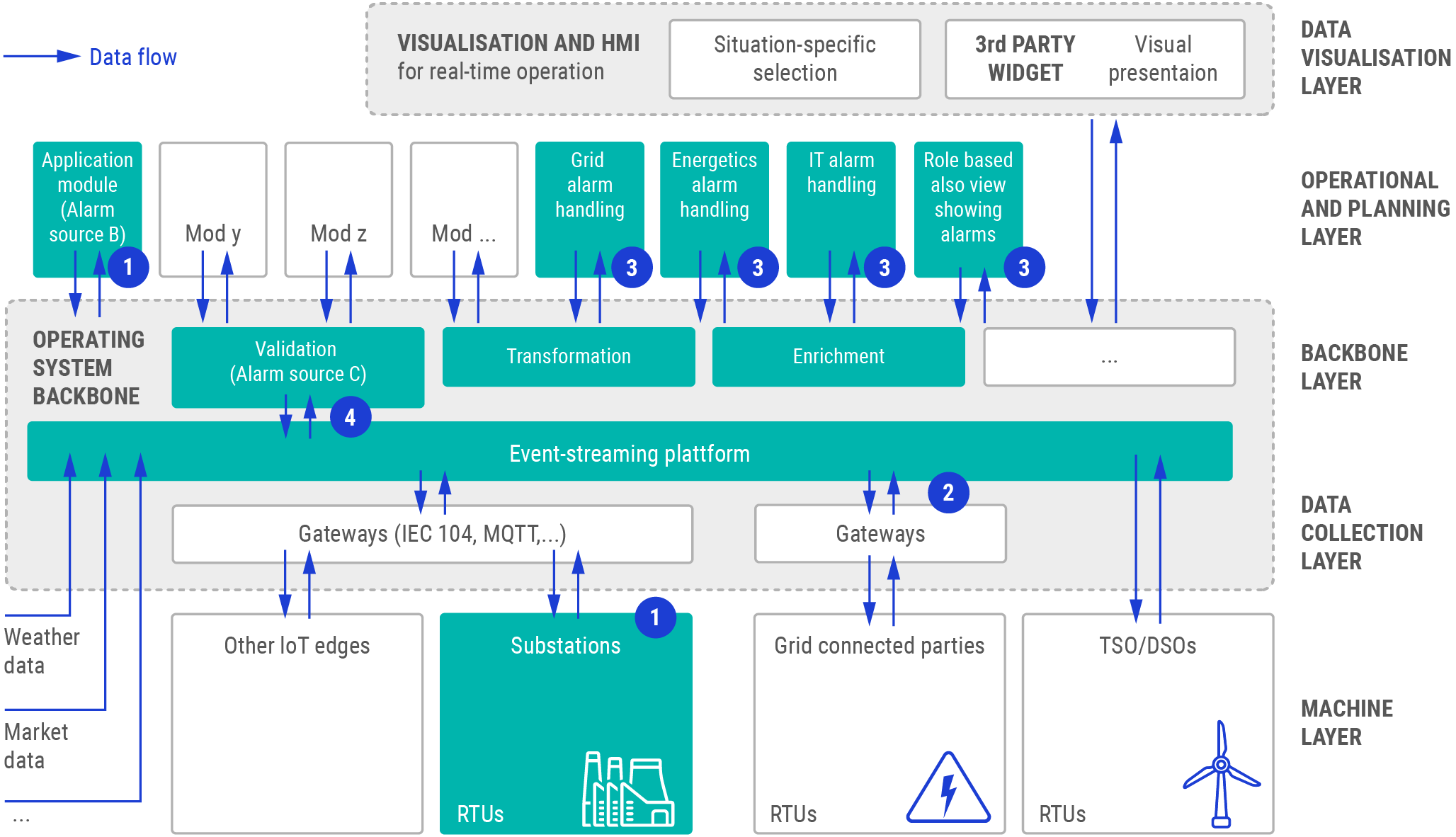

Event and data-driven stream processing is a programming paradigm: contrary to proprietary solutions, event streaming has events – such as incoming process data from changes in a switch state – at its core. In this way, the user is informed about every change in real time, giving them the most up-to-date picture of the current grid situation and therefore the longest possible time for decision-making and actions. Streaming platforms are available from vendors or as open-source software and have a wide range of benefits, especially for TSO-specific use cases:

- Enabling timely workflow decisions: event streaming makes relevant information available as soon as it occurs. Operators can make data-driven decisions without delays, which becomes more relevant as more renewable electricity producers are integrated into the grid. Dangerous situations can be resolved as they arise, leading to higher system and supply security.

- Handling large amounts of data: a data streaming architecture is designed to handle large amounts of data and scales depending on the current situation. This becomes increasingly important since the overall number of data points grows with the increase in production plants, the growing number of smart meters for demand side management, and the need for shorter measurement cycles and therefore more measurement values per unit of time.

- Supporting decoupled, scalable architecture: an event streaming platform provides a joint technological and data basis for a modular system approach. In this way, all modules work with the same input data provided by the event streaming platform, leading to consistent results for decision-making.

Benefits of a modular system setup

A flexible system comprising combinable modules requires seamless integration of functions. This also means that all modules must be based on a uniform data model and provide the user with an integrated, uniform user interface (UI).

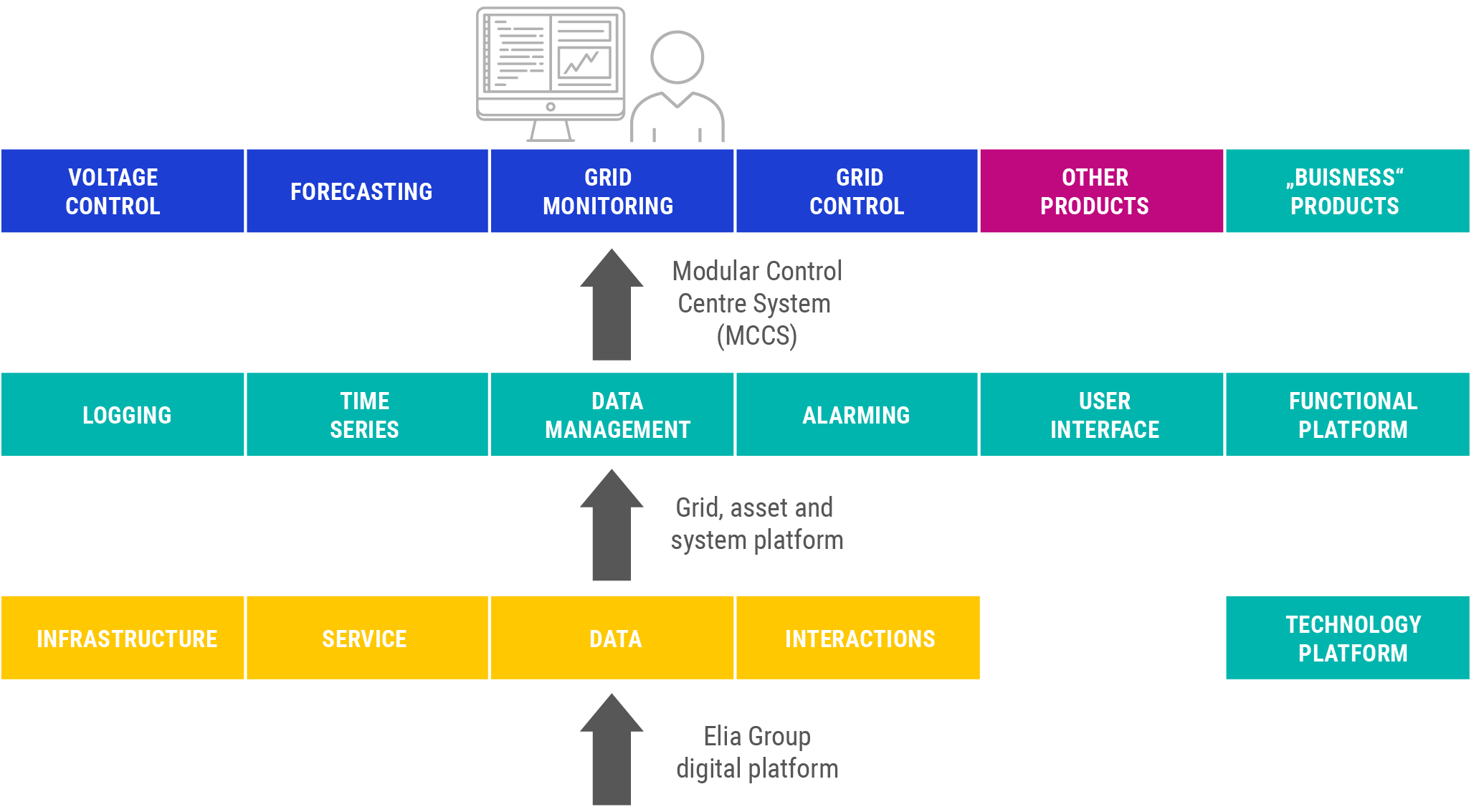

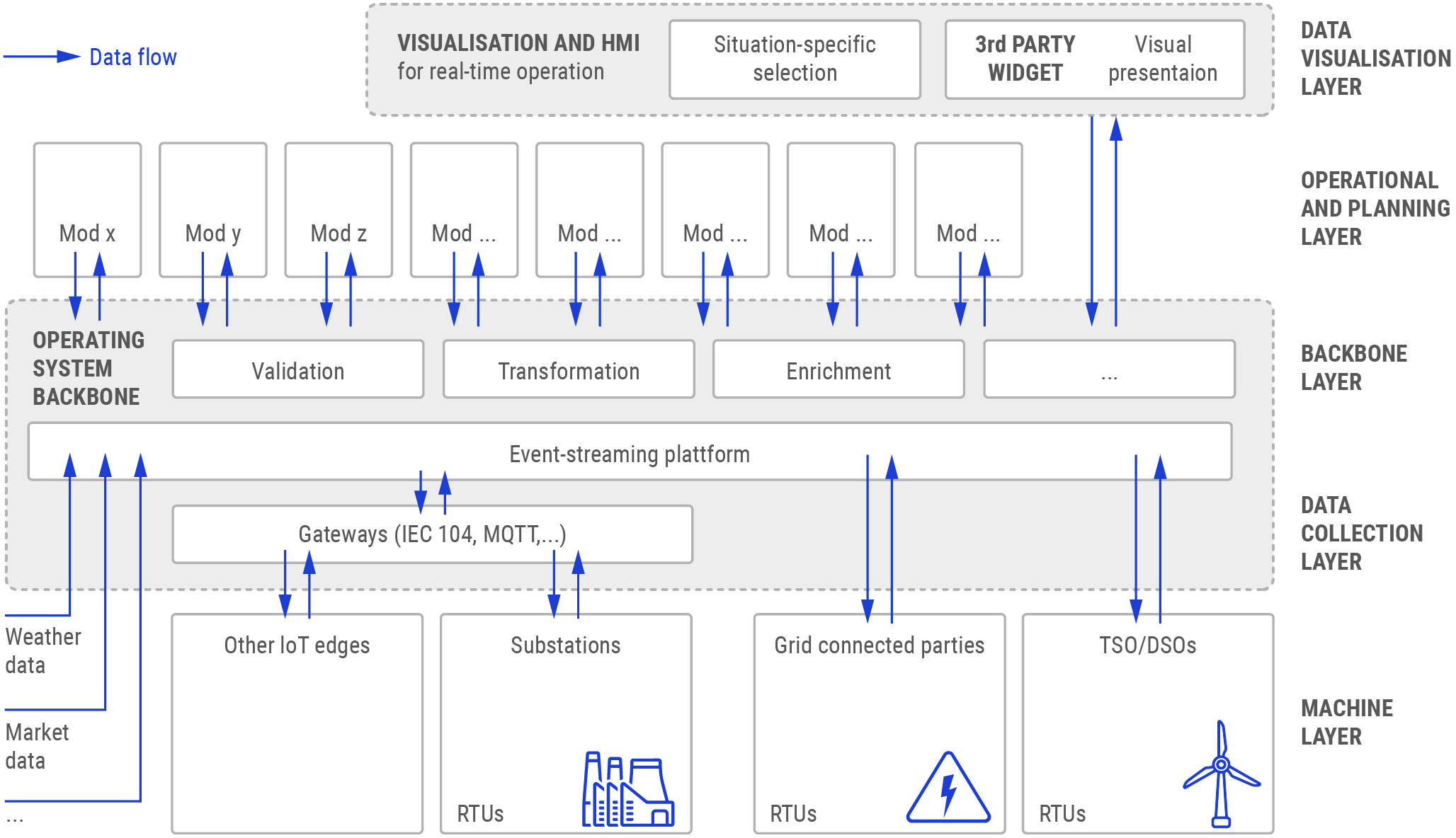

Therefore, the IT architecture of such a concept can look similar to the structure in the diagram below [1]:

- Event and data-driven stream processing facilities for a fast and open event and data-driven integration at the core.

- Harmonised master data management (MDM) based on Common Grid Model Exchange Specification (CGMES) standards as a single point of truth.

- Harmonised UIs based on micro-frontend pattern for a consistent user experience.

A guiding principle for the modular IT infrastructure for control centres is the division of previously monolithic/vertical applications into modules within well-defined edge contexts that can work together on an event-by-event basis but can be developed and improved separately.

Disaggregation takes place where it makes sense functionally and where there is strong potential for further use of modules, or where vertical consolidation would prevent other modules from using relevant data that are otherwise only available within monoliths.

Modules can be leveraged to become “shared services” within an instance. For example, a time series or event archive originally provided by a single-vendor supervisory control and data acquisition (SCADA) system could be reused to store time series and event data from other modules and to serve those data to them.

The flow diagram below illustrates an example of a collection of modules that – when combined – encompasses full SCADA functionality [1]:

The vertically integrated SCADA is disaggregated into complementary modules such as data acquisition, monitoring, alarming, control, logging, etc. A few shared components including MDM, event streaming platform, and UI provide fundamental capabilities to integrate modules at the data, event, and frontend levels.
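How such modules cooperate on an event-by-event basis can be illustrated with a small publish/subscribe sketch. It is a simplified, synchronous stand-in for an event streaming platform; the `EventBus` class, the topic names, the measurement point `busbar-A`, and the limit `LIMIT_KV` are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


class EventBus:
    """Tiny synchronous publish/subscribe dispatcher (illustrative only)."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def subscribe(self, topic, handler):
        """Register a callable that receives every event on the topic."""
        self._handlers[topic].append(handler)

    def publish(self, topic, event):
        """Deliver the event to every module registered for this topic."""
        for handler in list(self._handlers[topic]):
            handler(event)


bus = EventBus()
latest = {}    # monitoring module: last value per measurement point
alarms = []    # alarming module: raised alarms
journal = []   # logging module: every event seen

LIMIT_KV = 420.0  # hypothetical upper voltage limit, for illustration only


def monitor(event):
    latest[event["point"]] = event["value"]


def check_limit(event):
    # The alarming logic reacts to measurements and emits its own event.
    if event["value"] > LIMIT_KV:
        bus.publish("alarm", {"point": event["point"], "value": event["value"]})


bus.subscribe("measurement", monitor)
bus.subscribe("measurement", check_limit)
bus.subscribe("measurement", journal.append)
bus.subscribe("alarm", alarms.append)
bus.subscribe("alarm", journal.append)

# The data acquisition module publishes without knowing who is listening.
bus.publish("measurement", {"point": "busbar-A", "value": 401.5})
bus.publish("measurement", {"point": "busbar-A", "value": 423.0})
```

In this sketch, replacing the alarming logic means swapping one subscriber; the acquisition, monitoring, and logging parts stay untouched, which mirrors the one-by-one replaceability described above.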

Streaming platforms can be developed in a modular way to achieve the following benefits:

- Compliance with the TSO’s contribution to the area/national/European decarbonisation trajectories: a modular system allows implementing current and future business requirements. By combining TSO legacy expertise with innovative event-based IT architectures, this development approach contributes to the 2050 decarbonisation trajectory.

- Reducing the total cost of ownership: the potential of data-driven platforms together with a modular setup results in significantly improved efficiency of IT development processes (see table below for the tasks).

- Management of the IT tool development: modularisation of monolithic legacy solutions is also key to limiting the burden of system development and expansion while remaining agile. Independent modules and functions can be integrated or replaced one by one, which speeds up the development of new solutions. Furthermore, a security-by-design approach maintains or even improves reliability and reduces the impact of errors, since security requirements are embedded into every phase of the product lifecycle from development to operation and decommissioning.

| Past IT development tasks | Disruptive IT development process | Impact on process productivity |
|---|---|---|
| Slow pace to reach new IT solutions | Shorter implementation cycles | Smaller and faster deliverables at lower costs |
| Black box approach by suppliers | Continuous development with intermediate validations and consequent refinement by system operation | Internal IT solution ownership and immediate improvements |
| Mainly global changes accepted by suppliers | IT tool updates focused on a per-function basis | Smaller next-level changes, lower development risks |
| Costly upgrade efforts to be validated at a single date | Continuous integration & engineering efforts, enabling fast incremental deliveries | Clearly demarcated requirements and more granular breakdown of providers’ tasks |
| Maintenance of multiple data sources and synchronisation issues | Single source of truth, all modules use one single data model, harmonised UI | One UI, smaller training effort |
| Vendor lock-in | Functions and modules can be replaced one by one | Symbiotic modules from multiple providers |

Table: Impact on process productivity of disruptive IT development processes.

Current Enablers

The above-described solutions comprise software subsystems that perform real-time computation on streaming event data. They execute calculations on unbounded input data continuously as the data arrive, enabling immediate responses to current situations and/or storing results in files, object stores, or other databases for later use. Industrial applications include the processing of images and of sensor data from physical assets, manufacturing machines, and vehicles. An in-depth review of the technical features of such tools is provided in [2].

The commercial offer for energy streaming platforms from vendors worldwide is covered and compared in [3].
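Computation on unbounded input can be illustrated with a rolling mean that emits an updated result after every new value, without ever needing the stream to end. This is a minimal sketch using a Python generator rather than a stream processing framework; the function name `rolling_mean` and the sample values are assumptions made for the example.

```python
from collections import deque
from itertools import cycle, islice
from typing import Iterable, Iterator


def rolling_mean(values: Iterable[float], window: int) -> Iterator[float]:
    """Yield the mean of the last `window` values after each new arrival."""
    if window < 1:
        raise ValueError("window must be at least 1")
    recent = deque(maxlen=window)
    total = 0.0
    for value in values:
        if len(recent) == window:
            total -= recent[0]  # the oldest value is about to be evicted
        recent.append(value)
        total += value
        yield total / len(recent)


# An endless source stands in for a live measurement stream.
endless = cycle([50.0, 49.5, 50.5, 50.0])
first_four = list(islice(rolling_mean(endless, window=2), 4))
```

The generator holds only the current window in memory, so its footprint stays constant however long the input runs.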

The incremental development process allows taking advantage of the TSO legacy software tools.

Corresponding standards:

- IEC 60870-5-104 [5] belongs to part 5 of the IEC 60870 set of standards, which define systems used for telecontrol (SCADA) in electrical engineering and power system automation.

- MQTT is an Organization for the Advancement of Structured Information Standards (OASIS) standard messaging protocol for the Internet of Things (IoT). It is designed as a lightweight publish/subscribe messaging transport for connecting remote devices with a small code footprint and minimal network bandwidth.

- Harmonised MDM derived from common information model (CIM)/CGMES standards as a single point of truth.
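The publish/subscribe model of MQTT mentioned above relies on hierarchical topic names and subscription filters with two wildcards: `+` for exactly one level and `#` for all remaining levels. The sketch below implements a simplified version of these matching rules for illustration; it does not validate filters, it is not a substitute for an MQTT client library, and the topic names are hypothetical.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a subscription filter."""
    # Topics beginning with "$" are not matched by a leading wildcard.
    if topic.startswith("$") and topic_filter[:1] in ("+", "#"):
        return False
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")
    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: only meaningful as the last level;
            # it matches the parent level and any number of child levels.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False
        if level != "+" and level != topic_levels[i]:
            return False
    return len(filter_levels) == len(topic_levels)


# Hypothetical topic for a breaker state in a substation.
topic = "substation/A/breaker/17/state"
all_breaker_states = topic_matches("substation/+/breaker/+/state", topic)
everything_in_a = topic_matches("substation/A/#", topic)
everything_in_b = topic_matches("substation/B/#", topic)
```

With such filters, a module can subscribe to all breaker states across substations or to everything from a single substation without the publisher needing to know about either subscription.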

R&D Needs

R&D efforts focus on developing new “products” as described extensively in [6].

The technology is in line with milestone “Development of near-realtime platforms for dynamic system simulations (e.g. for DSA)” under Mission 3 and milestone “EU standardised data exchange protocols and ICT platforms” under Mission 4 of the ENTSO-E RDI Roadmap 2024-2034.

TSO Applications

Examples

| Location: Europe | Year: 2023 |
|---|---|
| Description: The Modular Control Center System (MCCS) by 50Hertz (Elia Group) aims to completely replace the 50Hertz control centre system and all its applications for supervisory control (SCADA), grid calculations (energy management system (EMS)), load-frequency control (LFC), scheduling, etc., and to provide those capabilities and functionalities in a modular way instead. | |
| Design: For reference, see the diagram below [7]. The base layer is the Elia Group Digital Platform (EDP), which provides basic infrastructure, data, service, and interaction technologies for the functions and applications of the two layers above. The middle layer is the functional platform, called the grid, asset and system platform (GrASP). It provides basic functionalities and processes such as logging, data management, time series, alerting, and UIs. The top layer comprises four applications relevant to grid operation that have already been developed: grid monitoring, grid control, grid calculation, and voltage regulation. MCCS in its current development stage already demonstrates the new possibilities that this opens for system control. | |
| Results: The modularisation introduced prevents the development of monolithic applications. Each product or module provides only its core functionality, receiving and transforming data and returning results. This offers support for identifying overlapping capabilities along different value chains. | |

Technology Readiness Level

The TRL has been assigned to reflect the European state of the art for TSOs, following the guidelines available here.

- TRL 8 for successful full-scale demonstration in the European grid environment performed piecewise in parts of the 50 Hertz network [1].

References and further reading

[1] 50 Hertz, “Market Sounding SCADA/EMS”, White paper, 2023.

[2] I. Haruna et al., “A Survey of Distributed Data Stream Processing Frameworks”, IEEE Access, vol. 7, p. 154300, 2019.

[3] Gartner, “Event Stream Processing Reviews and Ratings”, Gartner.

[4] M. Pracht, R. Heisig, “MCCS (Modular Control Center System) – Designing a Future-oriented Control Center System For a Successful Energy Transition”, 2022.

[5] IEC, “60870-5-104”, 2006.

[6] A. Marot et al., “Perspectives on Future Power System Control Centers for Energy Transition”, Journal of Modern Power Systems and Clean Energy, vol. 10, no. 2, p. 328, 2022.

[7] M. Pracht, “Vom Block zum Baukasten”, 50komma2, 2024.

ENTSO-E
